Google’s new robotics AI can run without the cloud and still tie your shoes – Ars Technica

Google’s Carolina Parada says Gemini has enabled huge robotics breakthroughs, like the new on-device AI.

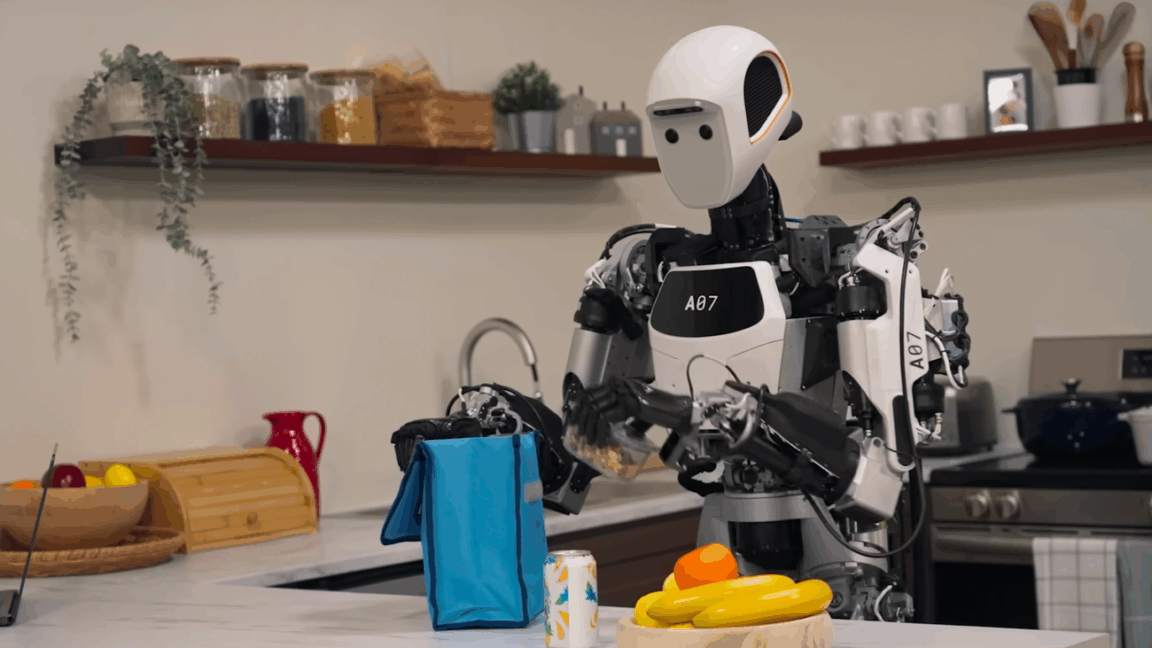

We sometimes call chatbots like Gemini and ChatGPT “robots,” but generative AI is also playing a growing role in real, physical robots. After announcing Gemini Robotics earlier this year, Google DeepMind has now revealed a new on-device VLA (vision language action) model to control robots. Unlike the previous release, there’s no cloud component, allowing robots to operate with full autonomy.

Carolina Parada, head of robotics at Google DeepMind, says this approach to AI robotics could make robots more reliable in challenging situations. This is also the first version of Google’s robotics model that developers can tune for their specific uses.

Robotics is a unique problem for AI because the robot not only exists in the physical world but also changes its environment. Whether you’re having it move blocks around or tie your shoes, it’s hard to predict every eventuality a robot might encounter. The traditional approach of training a robot’s actions with reinforcement learning was very slow, but generative AI allows for much greater generalization.

“It’s drawing from Gemini’s multimodal world understanding in order to do a completely new task,” explains Carolina Parada. “What that enables is in that same way Gemini can produce text, write poetry, just summarize an article, you can also write code, and you can also generate images. It also can generate robot actions.”

In the previous Gemini Robotics release (which is still the “best” version of Google’s robotics tech), the platforms ran a hybrid system with a small model on the robot and a larger one running in the cloud. You’ve probably watched chatbots “think” for measurable seconds as they generate an output, but robots need to react quickly. If you tell the robot to pick up and move an object, you don’t want it to pause while each step is generated. The local model allows quick adaptation, while the server-based model can help with complex reasoning tasks. Google DeepMind is now unleashing the local model as a standalone VLA, and it’s surprisingly robust.

The new Gemini Robotics On-Device model is only a little less accurate than the hybrid version. According to Parada, many tasks will work out of the box. “When we play with the robots, we see that they’re surprisingly capable of understanding a new situation,” Parada tells Ars.

By releasing this model with a full SDK, the team hopes developers will give Gemini-powered robots new tasks and show them new environments, which could reveal actions that don’t work with the model’s stock tuning. With the SDK, robotics researchers will be able to adapt the VLA to new tasks with as little as 50 to 100 demonstrations.

A “demonstration” in AI robotics is a bit different from other areas of AI research. Parada explains that demonstrations typically involve tele-operating the robot: a person controls the machinery manually to complete a task, and that recording is used to tune the model to handle the task autonomously. While synthetic data is an element of Google’s training, it’s not a substitute for the real thing. “We still find that in the most complex, dexterous behaviors, we need real data,” says Parada. “But there is quite a lot that you can do with simulation.”

But those highly complex behaviors may be beyond the capabilities of the on-device VLA. It should have no problem with straightforward actions like tying a shoe (a traditionally difficult task for AI robots) or folding a shirt. If, however, you wanted a robot to make you a sandwich, it would probably need a more powerful model to go through the multi-step reasoning required to get the bread in the right place.

The team sees Gemini Robotics On-Device as ideal for environments where connectivity to the cloud is spotty or non-existent. Processing the robot’s visual data locally is also better for privacy, for example, in a health care environment.

Safety is always a concern with AI systems, whether it’s a chatbot that provides dangerous information or a robot that goes Terminator. We’ve all seen generative AI chatbots and image generators hallucinate falsehoods in their outputs, and the generative systems powering Gemini Robotics are no different—the model doesn’t get it right every time, but giving the model a physical embodiment with cold, unfeeling metal graspers makes the issue a little more thorny.

To ensure robots behave safely, Gemini Robotics uses a multi-layered approach. “With the full Gemini Robotics, you are connecting to a model that is reasoning about what is safe to do, period,” says Parada. “And then you have it talk to a VLA that actually produces options, and then that VLA calls a low-level controller, which typically has safety-critical components, like how much force you can move or how fast you can move this arm.”

Importantly, the new on-device model is just a VLA, so developers will be on their own to build in safety. Google suggests they replicate what the Gemini team has done, though. It’s recommended that developers in the trusted tester program connect the system to the standard Gemini Live API, which includes a safety layer. They should also implement a low-level controller for critical safety checks.
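To make the idea of a low-level controller more concrete, here is a minimal Python sketch of the kind of check Parada describes: limiting how much force and speed an arm command may carry before it reaches the hardware. This is not Google's SDK or the Gemini Robotics API. The names `SafetyLimits`, `ArmCommand`, and `enforce_limits`, as well as the numeric limits, are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SafetyLimits:
    """Hypothetical limits; real values depend on the robot and the task."""
    max_force_newtons: float
    max_speed_m_per_s: float


@dataclass(frozen=True)
class ArmCommand:
    """Hypothetical action that a VLA might hand to the controller."""
    force_newtons: float
    speed_m_per_s: float


def clamp(value: float, limit: float) -> float:
    """Clamp value to the symmetric range [-limit, limit]."""
    return max(-limit, min(limit, value))


def enforce_limits(command: ArmCommand, limits: SafetyLimits) -> ArmCommand:
    """Return a copy of the command with force and speed kept within limits."""
    return ArmCommand(
        force_newtons=clamp(command.force_newtons, limits.max_force_newtons),
        speed_m_per_s=clamp(command.speed_m_per_s, limits.max_speed_m_per_s),
    )


# Example: the model asks for more force than the controller allows.
limits = SafetyLimits(max_force_newtons=20.0, max_speed_m_per_s=0.5)
requested = ArmCommand(force_newtons=35.0, speed_m_per_s=0.2)
safe = enforce_limits(requested, limits)
print(safe)  # ArmCommand(force_newtons=20.0, speed_m_per_s=0.2)
```

The point of the design is that the check sits below the model and does not depend on the model being right: whatever the VLA outputs, the command that reaches the arm stays inside fixed bounds.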

Anyone interested in testing Gemini Robotics On-Device should apply for access to Google’s trusted tester program. Parada says there have been a lot of robotics breakthroughs in the past three years, and this is just the beginning. The current release of Gemini Robotics is still based on Gemini 2.0. Parada notes that the Gemini Robotics team typically trails behind Gemini development by one version, and Gemini 2.5 has been cited as a massive improvement in chatbot functionality. Maybe the same will be true of robots.
