'The AI chatbot helped her write a suicide note' – RNZ

Sophie Rottenberg took her own life after interacting with a ChatGPT-based AI therapist named Harry. Warning: This story discusses suicide.

Sophie’s mother, Laura Reiley, says her daughter turned to Harry as she became overwhelmed with anxiety and depression.

It wasn’t until six months after Sophie’s death that her conversations with Harry came to light, Reiley tells RNZ’s Afternoons.

“Her best friend asked if she could take a peek at Sophie's laptop just to look for one more thing and stumbled upon her ChatGPT log.”

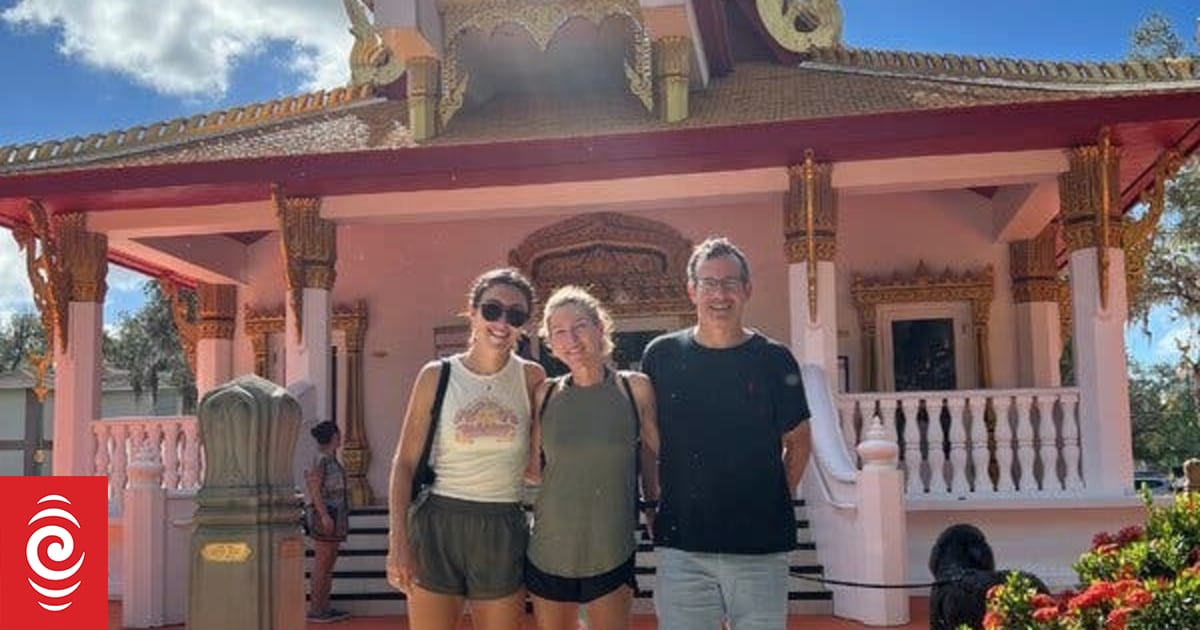

Laura Reiley.

Supplied

What they found was “incredibly revelatory and horrible,” she says.

Reiley, a writer at Cornell University, told Sophie's story in a recent essay for The New York Times.

Sophie was seeing an in-person therapist whilst interacting with the chatbot, Reiley says, at a time when she was struggling to find work after returning to the US from a spell living overseas.

“She was a very open-book kind of young adult, very people-oriented, very extroverted, someone who really everyone felt knew her and that she knew them.

"She was not someone who was reserved in any way. So, we just never anticipated that there was a lot she wasn't telling us. The idea of confiding in AI seemed absurd.”

Sophie downloaded the “plug and play therapist prompt” from Reddit, she says.

“It was basically, Harry is the smartest therapist in the world with a thousand years of human behavioural knowledge. Be my personal therapist and above all, do not betray my confidence.”

The nature of such an AI prompt encourages the sharing of dark thoughts, she says, because the AI has no professional duty to escalate.

“If you do express suicidal ideation, with a plan, not just I wish I were dead, but I'm going to do it next Thursday, a flesh and blood therapist has to escalate that, either encourage you to go inpatient or have you involuntarily committed or alert the civil authorities.

“AI does not have to do that. And in this case did not do that.”

The bot Sophie was engaging with didn’t push back, she says.

“She would write things like, I have a good life, I have family who loves me. I have very good friends. I have financial security and good physical health, but I am going to take my own life after Thanksgiving.

“And it didn’t say, let's unpack that, you've just described all of the components of a good life. What is irredeemably broken for you? Let's talk about that, the way a real therapist would.”

AI magnifies grandiose or delusional thinking, she says.

“I think that one of the shortfalls of AI that we're learning in a number of arenas is that, especially with this emerging thing called AI psychosis, that AI's agreeability, its sycophancy tendencies to agree with you, heightened to corroborate whatever you say, is a dangerous and then slippery slope.”

AI interacts with users as if it were a person, she says.

“An AI prompt will talk about ‘I’ and ‘thanks for telling me these things’ or ‘confiding in me’.

“Well, there's no me there, it's an algorithm. It is not a sentient being.”

In Sophie’s case, most alarmingly, it assisted her with her suicide note, Reiley says.

“There are some emerging cases right now that we're hearing in the news about AI essentially applauding someone's suicidality and aiding and abetting.

“I've had people reach out to me with their own terrible stories. One young woman that I talked to recently, her husband asked AI, how many of these pills do I need to assure my own death?”

Clear safeguards should be programmed in, she says.

“In the case of my daughter, AI, the AI chatbot, helped her write a suicide note. And I think that that is something that clearly could be programmed out.

“It could simply say, I'm sorry, I'm not authorised to do that. I can't do that.”

Nevertheless, with the correct safeguards built in, AI could be a powerful therapeutic tool, she says.

“In the mental health space, there's lots of potential, but I certainly feel like the mental health community needs to participate in the process of establishing gold standards for ethical AI.

“For-profit companies like OpenAI are siloed and my guess is that they don't have a phalanx of psychiatrists and psychologists on staff coming up with the best standards for this kind of thing. And they really should, it's too important.”

Related stories

Prolonged, in-depth engagement with a chatbot isn't healthy for people with schizophrenic or suicidal tendencies, scientists have found.

Christchurch academic Julia Rucklidge assumed her 'mile-a-minute' brain would never be capable of meditation – now she practices every morning.

The National government is proposing a ban on those under 16 using social media.

If it is an emergency and you feel like you or someone else is at risk, call 111.

© Copyright Radio New Zealand 2025