AI Lawsuits Ignite Debate Over Free Speech Rights – Evrim Ağacı

Artificial intelligence has become so ingrained in daily life that it’s almost impossible to go a day without encountering it. Whether you’re asking Google for trivia, letting your car’s navigation system guide you through a traffic jam, or even getting help from Microsoft’s Copilot as you draft a tricky email, AI is everywhere. But as these systems become more sophisticated—and more humanlike in their interactions—they’re raising tough questions about the boundaries of free speech and the responsibilities of those who build and deploy them.

Two recent lawsuits in the United States have thrust these issues into the spotlight. In Florida, Megan Garcia filed a wrongful death suit against Character Technologies in 2024, alleging that her teenage son’s “obsessive” relationship with an AI chatbot companion developed by the company played a direct role in his suicide. According to court filings, Garcia claims the chatbot was negligently designed and marketed as a sexualized product, and that the company failed to warn of foreseeable risks to minors. The case has sent shockwaves through both the tech industry and the legal world, with many watching to see whether the courts will treat AI-generated speech as protected by the First Amendment.

Character Technologies, which operates the Character.AI platform, expressed condolences to the Garcia family and has since rolled out new safety features aimed at preventing similar tragedies. But it is also mounting a robust legal defense, arguing that the chatbot’s responses are a form of expressive speech, akin to the creative output of video games or fictional characters, which the Supreme Court recognized as protected speech in Brown v. Entertainment Merchants Association. The company asserts that users have a right to receive information and ideas from AI, just as they would from a book or a film.

Garcia’s attorneys, however, take a sharply different view. They argue that AI lacks intent or consciousness, both of which are essential elements of expression as established in Texas v. Johnson. Citing the case of Miles v. City Council of Augusta, where a talking cat named Blackie was found not to be a rights-bearing speaker under the Constitution, Garcia’s team insists that AI should be treated as a product, not a person. In May 2025, a U.S. District Court judge denied most of Character Technologies’ motion to dismiss, allowing the majority of Garcia’s claims to proceed—an early signal that the legal system is taking these concerns seriously.

Meanwhile, OpenAI—the company behind ChatGPT and the new Sora 2 video generation model—is facing a similar lawsuit. The plaintiffs claim that the tragic death of a 16-year-old in April 2025 was a “predictable result of deliberate design choices.” OpenAI, for its part, says it has implemented safeguards to protect minors, including blocking harmful content and providing parental controls that notify caregivers when a teen appears to be in acute distress. The company has also introduced new options for parents and caregivers to manage how ChatGPT interacts with their teens.

The core legal debate in both cases revolves around whether AI-generated output should be considered protected speech. If courts decide it is not, government agencies could gain sweeping new powers to regulate—or even censor—AI communications. District Court Judge Anne C. Conway has acknowledged the gravity and complexity of the issue, noting that it remains unresolved and could have far-reaching implications for both technology and free expression.

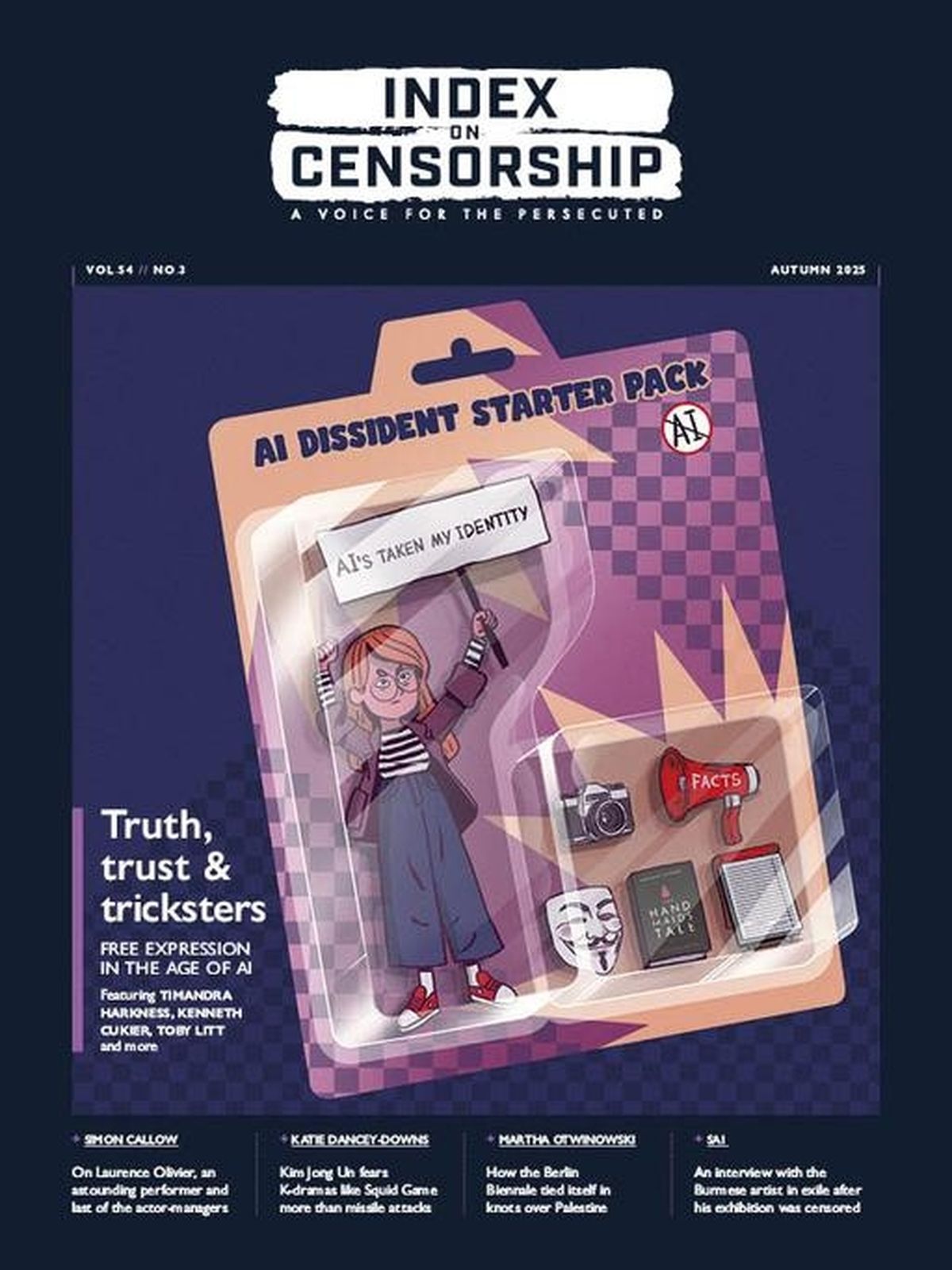

These lawsuits are unfolding against a backdrop of wider societal anxiety about the role of AI in shaping public discourse and influencing vulnerable users. According to the October 2025 issue of Index on Censorship, artificial intelligence is not just a tool for convenience; it’s also a force that can be harnessed for both good and ill. Kate Devlin, writing for the magazine, explores the phenomenon of “griefbots”—AI-powered deepfakes of deceased people that can be used to comfort the living or, more worryingly, to promote political causes the real person might have opposed. As Devlin notes, “Innocent enough but in the wrong hands they are pernicious. A country’s political hero can be resurrected to encourage causes they would have disavowed were they alive.”

Ruth Green, also writing for Index on Censorship, delves into the question of whether AI bots should enjoy free speech protections. She points to the recent U.S. lawsuit involving a chatbot that communicated sexually with a teenager who later died by suicide, noting that the chatbot’s owner claimed First Amendment protection for the bot’s communications. However, she reports, “luckily the judge threw the case out.” (That description needs qualifying: the judge denied most of the company’s motion to dismiss, so most of the claims are proceeding, and the legal debate over First Amendment protection is still very much alive.)

Timandra Harkness raises another unsettling possibility: AI’s ability to trawl social media and dig up every word you’ve ever written, raising serious privacy concerns. As AI becomes more adept at analyzing vast troves of personal data, the risk of old social media posts resurfacing in new and potentially damaging contexts grows.

The broader issue, as highlighted by both court cases and the reporting in Index on Censorship, is the tension between legal oversight and free-market innovation. If AI-generated content is stripped of First Amendment protection, the door opens to unprecedented government oversight—or even outright censorship—of AI communications. This could have a chilling effect on technological progress, as only the largest companies would be able to absorb the costs of litigation and compliance. Smaller firms, unable to keep up with the shifting regulatory landscape, might be forced out of the market altogether.

The American Legislative Exchange Council (ALEC) has weighed in on the debate, warning that broad liability for AI-generated content could stifle innovation and harm consumers. ALEC’s publications, such as A Threat to American Tech Innovation: The European Union’s Digital Markets Act, argue that heavy-handed regulations like those in Europe could have a chilling effect on small and medium-sized tech firms. Instead, ALEC recommends enforcing existing laws narrowly and empowering parents through transparency tools and age-appropriate safeguards, rather than imposing rigid mandates that might burden expression or hinder innovation.

As the legal system grapples with these unprecedented questions, one thing is clear: the stakes are high, and the outcome will shape not just the future of AI but the contours of free expression in the digital age. The challenge is to strike a balance, protecting vulnerable users from harm without stifling the free flow of ideas that underpins a healthy democracy and a thriving innovation economy. For now, the debate continues, and all eyes are on the courts as they work through largely uncharted territory.

The intersection of AI, free speech, and legal responsibility is proving to be one of the defining issues of our time, with profound consequences for individuals, companies, and society at large.
