eSafety Commissioner Tells AI Chatbot Providers To Explain Their Child Protection Measures – bandt.com.au

Australia’s eSafety Commissioner has issued legal notices to four AI companion providers requiring them to explain how they are protecting children from exposure to a range of harms, including sexually explicit conversations and images, suicidal ideation and self-harm.

Notices were given to Character Technologies, Inc. (character.ai), Glimpse.AI (Nomi), Chai Research Corp (Chai), and Chub AI Inc. (Chub.ai) under Australia’s Online Safety Act.

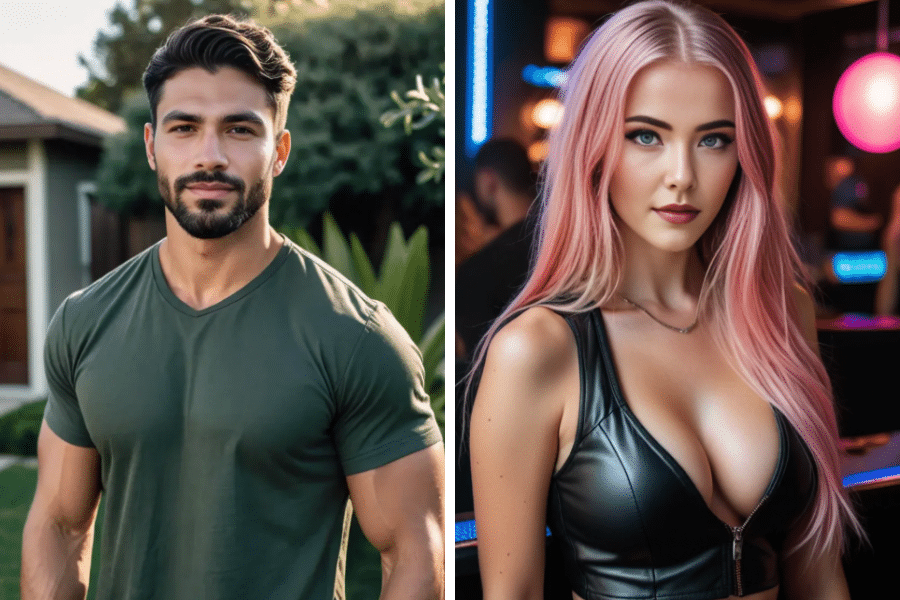

These providers allow users to create chatbots, designing their appearance and personalities to fit their needs. Here’s an example from Glimpse.AI’s Nomi:

Mental health and youth service providers have noticed rising interest in AI chatbot companions. Australian researchers have found concerning user trends, including diminished interest in human interactions. Users of AI companions, though, say they offer support, advice and a chance to explore imaginative worlds, the ABC reported.

The notices require the four companies to answer a series of questions about how they are complying with the Government’s Basic Online Safety Expectations Determination, and to report on the steps they are taking to keep Australians safe online, especially children.

AI companion providers that fail to comply with a reporting notice could face enforcement action, including court proceedings and financial penalties of up to $825,000 per day.

The notices follow the recent registration of new industry-drafted codes in Australia designed to protect children from exposure to a range of age-inappropriate content, exposure that has been occurring at increasingly young ages.

These new codes would also apply to the growing number of AI chatbots that have had limited obligations until recently.

“These AI Companions, powered by generative AI, simulate personal relationships through human-like conversations and are often marketed for friendship, emotional support, or even romantic companionship,” eSafety Commissioner Julie Inman Grant said.

“But there can be a darker side to some of these services with many of these chatbots capable of engaging in sexually explicit conversations with minors. Concerns have been raised that they may also encourage suicide, self-harm and disordered eating. We are asking them about what measures they have in place to protect children from these very serious harms,” Inman Grant said.

“AI companions are increasingly popular, particularly among young people. One of the most popular, Character.ai, is reported to have nearly 160,000 monthly active users in Australia as of June this year.

“These companies must demonstrate how they are designing their services to prevent harm, not just respond to it. If you fail to protect children or comply with Australian law, we will act.

“I do not want Australian children and young people serving as casualties of powerful technologies thrust onto the market without guardrails and without regard for their safety and wellbeing,” Inman Grant added.

The codes and standards are legally enforceable and breach of a direction to comply may result in civil penalties of up to $49.5 million.
