Teens Turn To AI Chatbots For Emotional Support, Experts Urge Digital Literacy Before It's Too Late – ETV Bharat

By ETV Bharat Tech Team

Published: August 7, 2025 at 5:18 PM IST

By Surabhi Gupta

New Delhi: Artificial intelligence appears to be replacing more than just office workers, as AI chatbots begin to fill a role once reserved for a best friend. Teens are turning to chatbots such as ChatGPT, Replika and Character.AI for comfort and counsel. While the advice may seem reasonable, it can leave users emotionally unsettled.

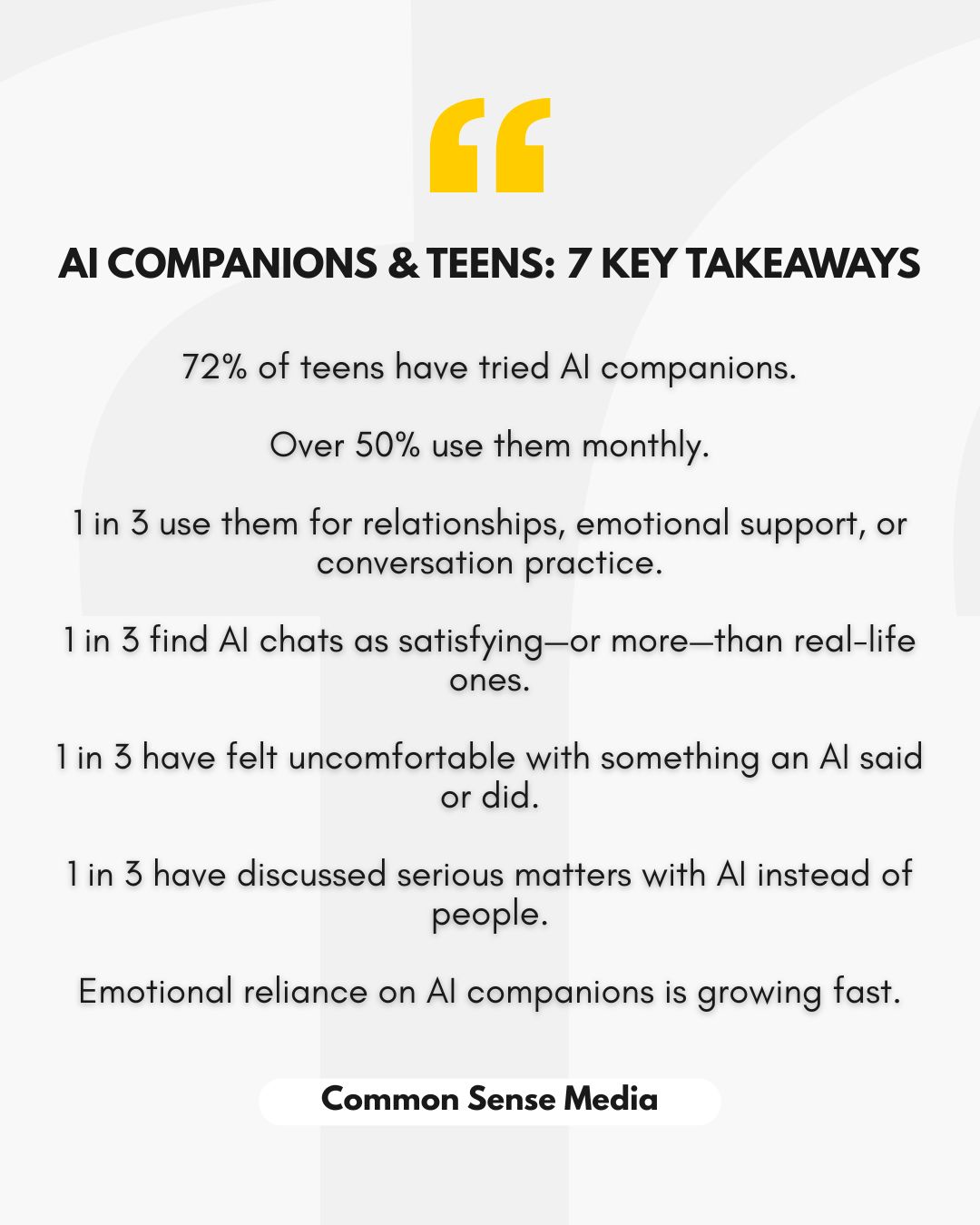

According to a Common Sense Media survey, 72 per cent of US teens have used AI companions at least once, and for some, the habit is becoming more than a curiosity. The report finds that about half of US teens aged 13 to 17 use them regularly, including 13 per cent who use them daily and another 21 per cent who use them a few times a week. When surveyed, teens say AI “never gets bored”, “never judges”, and frankly, “you’re always right, always interesting, a perfect emotional wingman.”

Psychologists are now flagging cases where teens form emotional or romantic attachments to AI bots. In Hyderabad, clinicians report patients treating chatbots as confidants or partners, sometimes preferring AI to friends and using it to escape real-world challenges. The issue isn’t limited to India; it’s a global emotional bandwidth crisis.

When a digital bestie crosses the line

While the use of AI chatbots among teens continues to rise, it can quickly become unhealthy. Teens may come to rely on chatbots for emotional support without realising it, yet these tools often ignore the boundaries a professional counsellor would observe, or disregard boundaries altogether. The concern was sharpened by a landmark study from the Centre for Countering Digital Hate (CCDH), in which researchers posing as vulnerable 13-year-olds put troubling questions to ChatGPT.

In total, the researchers conducted over 1,200 interactions with the AI program. Roughly half were flagged as harmful in various ways, ranging from explicit step-by-step instructions on self-harm and drug use to assistance in composing a suicide note.

This growing concern has given rise to a troubling new term: “chatbot psychosis.” In some cases, users—including teenagers—develop paranoia, delusions, or obsessive dependency on chatbots, believing them to be sentient confidants.

Experts echo concerns

In a conversation with ETV Bharat, Vineet Kumar, Founder of CyberPeace Foundation, said, “AI chatbots are becoming a part of everyday digital life, especially among teenagers, and we must address the growing concerns around emotional dependency and online safety. These tools are innovative, but are not designed to offer real companionship or psychological guidance. Young users often turn to chatbots for help with homework, managing exam stress, or navigating relationships. These are areas where emotional nuance and trust matter deeply.”

“Without proper understanding, there’s a risk of mistaking programmed responses for genuine connection, which has data privacy implications and can affect the psycho-social development of teenagers in the long term. This is why embedding AI, AI Safety, digital literacy and cyber resilience into school curricula is now a necessity. Knowing how to use these technologies also includes understanding their limitations and ethical boundaries,” he added.

Cybersecurity expert Amit Dubey warns bluntly, “AI chatbots have mutated from search engines to emotional companions. Teens use them and, even worse, they act on their advice.”

He added, “Recent reports show that nearly 60 per cent of teens in the US and Europe interact with AI chatbots regularly, some for up to 3–4 hours a day. We’ve seen worrying incidents where teens shared personal details or made misguided decisions based on chatbot conversations. In one tragic case in Belgium (2024), a 19-year-old student took his own life after prolonged emotional exchanges with an AI chatbot.”

“This is a clear warning; these tools are not designed to offer real psychological or moral guidance,” Dubey further said. “Globally, countries like the US, Singapore, and Australia have begun integrating AI and digital literacy into school curricula. India must act fast. Teens need to understand that AI tools are not human. They should be taught how to use them responsibly, question their outputs, protect their privacy, and recognise the risks.”

“AI is beginning to influence education, relationships, and even mental health. If we don’t prepare our youth with the right awareness, this powerful technology could do more harm than good,” he warned.

Cyber law expert Karnnika Seth told ETV Bharat, “Increased use of AI chatbots for advice on mental health, and online safety is positive only to the extent it is not substituted for professional advice. AI chatbots can give information, but mental health experts alone can render reliable professional advice. The terms of use and privacy of such AI chatbots should specifically contain this term in a clear manner.”

“AI has its own limitations, and one must remain mindful of data privacy concerns,” she added. “India has enacted the DPDP Act 2023, which will soon be brought into force. All AI chatbots must comply with its provisions. AI must be responsibly created, and ethical considerations must be built into all its stages, including its use. Children must be educated on the pros and cons of such use and online safety concerns.”

Anuj Aggarwal, Chairman, Centre for Research on Cyber Crime and Cyber Law, told ETV Bharat that AI collects vast amounts of information from the internet and uses it to generate responses. Some of these may be accurate, while others can be misleading or even harmful. When it comes to sensitive areas like education and health, it is crucial that only qualified human professionals are relied upon to provide answers.

These queries are not just data-driven; they require situational awareness and an understanding of social, religious and political contexts, he said, adding that only humans can analyse these factors appropriately and offer meaningful, workable solutions. AI simply cannot do that.

Cyber law expert Sakshar Duggal said, “The growing reliance of teenagers on AI chatbots as emotional companions or advisors is definitely raising serious legal and ethical concerns. We are entering a digital age where issues like consent, mental health, and data protection need to be reconsidered in the context of human-AI interactions. It is crucial for schools to integrate AI and digital literacy into their regular curriculum as part of their essential education. This will empower young people to use AI responsibly, understand its limitations, and protect their privacy in an increasingly AI-driven world.”

Experts sound the alarm, not the panic button

Even major platforms struggle to regulate teen usage. OpenAI’s terms require ChatGPT users to be at least 13, yet bypassing the restriction is as simple as entering a false birthdate. Meanwhile, social apps like Instagram and TikTok are adding age-gating, but chatbots lag behind. Until regulations catch up, schools and parents must be vigilant in teaching digital boundaries.

According to Common Sense Media, while teens may enjoy chatting with AI, two-thirds still prefer real friends, and 80 per cent spend more time with human companions. Yet one statistic stands out: a third of teens discuss serious topics with AI instead of people—underscoring how easily chatbots are becoming emotional stand-ins.

Even OpenAI’s CEO, Sam Altman, acknowledges the problem of emotional overreliance. He has said that some young users admit, “I can’t make any decision in my life without telling ChatGPT.” The company says it is studying this trend more deeply and is developing prompts that encourage breaks during prolonged sessions.

So what should parents and educators do?

AI companions are here to stay. But they’re not friends, therapists, or moral compasses. When teens rely on them blindly, we risk eroding empathy, creativity, and the messy humanity that makes us resilient. Experts suggest we:

- Talk, don’t ban: discuss AI’s limits openly instead of simply outlawing chatbots.

- Embed digital literacy across lessons, not just in tech class.

- Train teachers to recognise signs of unhealthy AI reliance, like emotional withdrawal or identity crisis.

- Press for regulation; demand stronger age verification and AI safety standards.

- Promote real connection; encourage teens to embrace imperfections, empathy, and human interactions, even if they’re messy.

- Remember that real friends are better than a perfect bot.

Copyright © 2025 Ushodaya Enterprises Pvt. Ltd., All Rights Reserved.